This is the fourth installment in a ten-part series.

- All Communication is Manipulation

- All Communication is Behavioral Manipulation

- Consensual and Nonconsensual Manipulation

- ACiM is a Natural Extension of Cybernetic Theory

- Response to Simpolism on ACiM

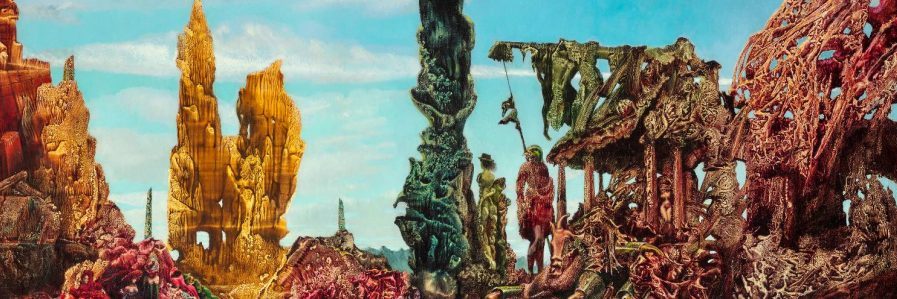

- A Landscape of Communication

- All Is Well E28: Functional Railroading

- All Is Well E28: “My Hands Are Tied”

- Girls S2: Lip Service

- Against Self Expression

At the core of cybernetics is the idea of agents (synthetic and organic, human, machine, and animal) as servomechanisms, or “servos.” Servos are machines that use feedback to correct their actions. A sensory system and built-in encoder monitor the environment so that the servo can ensure its outputs achieve the desired effects (i.e., lead to the desired inputs). An anti-aircraft system, for instance, fires, monitors whether the plane is hit or where the shell bursts, and uses this information to adjust its aim.

In other words, as agents we exist in an environment and embody desires (or goals). To realize these desires, we must alter our environment. To alter it effectively, we must monitor how that environment, experienced through sensory input, changes in response to our behavioral outputs. By noting the discrepancy between what we anticipated and what the environment actually looks like after we act, we fine-tune our interventions until the gap between anticipation and actuality is minimal. In cybernetics, this loop, in which sensed discrepancies drive corrections that shrink them, is called negative feedback.
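The loop just described can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a description of real fire-control systems. The target's bearing is a single number. A hypothetical crosswind (the invented `wind` parameter) shifts every shot. The gun corrects its aim by a fixed fraction (`gain`, an arbitrary choice) of the observed miss. The servo never needs to know the wind; it only needs the discrepancy between the outcome it intended and the outcome it observed.

```python
def servo_aim(target: float, wind: float, initial_aim: float,
              gain: float = 0.5, tolerance: float = 0.01,
              max_shots: int = 100) -> tuple[float, list[float]]:
    """Fire, observe where each shell bursts, and correct the aim until on target.

    `wind` is a hypothetical disturbance the gun never measures directly;
    it only ever sees its effect on where the shell bursts.
    """
    aim = initial_aim
    bursts: list[float] = []
    for _ in range(max_shots):
        burst = aim + wind          # the environment's response to our output
        bursts.append(burst)        # sensory input: where the shell actually burst
        error = target - burst      # gap between intended and observed outcome
        if abs(error) <= tolerance:
            break
        aim += gain * error         # correct against the error: negative feedback
    return aim, bursts


aim, bursts = servo_aim(target=30.0, wind=-4.0, initial_aim=10.0)
print(f"final aim {aim:.2f} after {len(bursts)} shots")
```

The gun ends up aiming near 34 degrees rather than 30, because it has implicitly compensated for a wind it never observed.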

(This general model is strikingly similar to the predictive model advanced by Andy Clark and Karl Friston, where feedback is often referred to as “prediction error.”)

When it comes to communication, however, we seem hesitant to extend the servo model and properly conceptualize communication as a control system. Norbert Wiener would write: “When I give an order to a machine, the situation is not essentially different from… when I give an order to a person.”[1] Cyberneticians understood well that orders served to manipulate the receiver’s behavior. But the field seems (to the best of my knowledge, although I welcome any tips to the contrary) to have failed to fully recognize that all representational acts alter a machine’s inputs, and therefore, cybernetically, its outputs. Moreover, it failed to recognize that this alteration is the purpose of representation to begin with.

Insofar as an anti-aircraft spotter intends his gunner to fire when he says “Fire,” or intends him to move the gun to a specific coordinate when he says “Move the gun to the coordinate,” he also intends these effects when he merely relays (represents) the location of the aircraft, or says “The bogie is in your sights.” The imperative mood merely makes explicit the behavioral alteration that guides all representation. Its use has far more to do with whether the manipulation carried by the communication is consensual or nonconsensual than with whether the communication carries a manipulation to begin with.
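To make the spotter example concrete, here is a toy gunner in Python. Everything in it is hypothetical. The message keys (`spotter_wants_fire`, `bogie_in_sights`, and so on) and the gunner's policy were invented for this sketch. Orders are modeled as reports of the spotter's desires, in the spirit of footnote [1]. From the gunner's side, an order and a report are just two ways of editing the same inputs, and they can drive identical outputs.

```python
from dataclasses import dataclass, field


@dataclass
class Gunner:
    """Hypothetical gunner servo: its outputs depend only on its current inputs."""
    bearing: float = 0.0
    inputs: dict = field(default_factory=dict)
    actions: list = field(default_factory=list)

    def hear(self, message: dict) -> None:
        # Imperative or indicative, every message does the same thing: alter inputs.
        self.inputs.update(message)

    def act(self) -> None:
        # An order is treated as a report of what the spotter wants.
        target = self.inputs.pop("spotter_wants_bearing", None)
        if target is None:
            target = self.inputs.pop("bogie_bearing", None)
        if target is not None and target != self.bearing:
            self.bearing = target
            self.actions.append(f"slew to {target}")
        ordered = self.inputs.pop("spotter_wants_fire", False)
        in_sights = self.inputs.pop("bogie_in_sights", False)
        if ordered or in_sights:
            self.actions.append("fire")


def run(message: dict) -> list:
    gunner = Gunner()
    gunner.hear(message)
    gunner.act()
    return gunner.actions


# "Move the gun to the coordinate" vs. merely relaying the aircraft's location:
print(run({"spotter_wants_bearing": 42.0}), run({"bogie_bearing": 42.0}))
# "Fire" vs. "The bogie is in your sights":
print(run({"spotter_wants_fire": True}), run({"bogie_in_sights": True}))
```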

In other words, cybernetics (AFAICT) failed to recognize that behavioral manipulation extends far beyond the imperative mood into all communication. It would likely not have missed this insight had the extensive ethological literature now available to the modern commentator existed then. And yet this view of communication in no way clashes with the fundamental principles of cybernetics. I think it fair to say that the view of communication as manipulation is a fundamentally cybernetic view. It is cybernetic not just in casting language as a control system, but in understanding linguistic acts as conditioned by a historical training regime of feedback.

I am defining communication, then (and expression and representation generally), as the intentional alteration by a servo of another servo’s inputs (feedback) in order to alter and control its outputs (behavior). @joXn mocks, on Twitter: “Did you realize that when you interact with someone in any way you literally change their sensory inputs? Makes you think.” His tone is dismissive, but the alteration of sensory inputs is liable to alter behavioral outputs, à la Bateson’s “difference which makes a difference.” Moreover, consider what we mean when we say a communicative act “has a point,” or when we find it “interesting” (because receiving it updates our model of reality). I believe we are saying that our sensory inputs have been altered in a way that will alter our behavioral outputs, if only in some hypothetical and unforeseen situation down the line. (Often the change comes in the moment too, within the interaction itself. We become friendlier, for instance, when we are given information that will help us later.) As I have argued extensively, this view of communication, as geared expressly toward altering other agents’ behavior, follows naturally from animal signaling. It also follows from Schelling’s bargaining theory and from studies of communication in group coordination processes (see, e.g., Edwin Hutchins, Cognition in the Wild):

When a quartermaster on a ship gives a command to a ship’s mate, it is expressly with the purpose of that mate executing the command, i.e. performing some resulting action… [And in] a high-stakes poker game, between competent players—the purpose of intentional expressive acts is the manipulation of other players’ tactics or choices.
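The earlier claim can be stated as a simple test: a communicative act “has a point” when it would alter the receiver's behavior in some situation, even a hypothetical one. The sketch below is a hypothetical formalization, not a standard measure. The receiver is modeled as a policy mapping (beliefs, situation) to an action. A message “has a point” if at least one situation exists in which the updated beliefs yield a different action. The `hiker` policy and its situations were invented for illustration.

```python
from typing import Callable

# A receiver is modeled as a policy: (beliefs, situation) -> action.
Policy = Callable[[dict, str], str]


def has_a_point(policy: Policy, beliefs: dict, message: dict,
                situations: list[str]) -> list[str]:
    """Return the situations in which receiving `message` would change the action.

    An empty result means the message is, in Bateson's phrase, a difference
    that makes no difference to this receiver.
    """
    updated = {**beliefs, **message}    # receiving the message edits the inputs
    return [s for s in situations if policy(beliefs, s) != policy(updated, s)]


def hiker(beliefs: dict, situation: str) -> str:
    # Hypothetical receiver: the right-hand path crosses a bridge.
    if situation == "at the fork":
        return "go left" if beliefs.get("bridge_out") else "go right"
    if situation == "at the trailhead":
        return "set off"
    return "keep walking"


situations = ["at the trailhead", "at the fork", "on the ridge"]
print(has_a_point(hiker, {}, {"bridge_out": True}, situations))
print(has_a_point(hiker, {}, {"sky_is_blue": True}, situations))
```

The news about the bridge changes nothing at the trailhead, yet it still “has a point” because it changes what the hiker will do at the fork later on.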

Stigmergy (coordination through the traces agents leave in a shared environment, as in termite nest-building or ant pheromone trails) is interesting here. It refers to communication systems in which no extra expressive or representational work is performed. Each individual’s action is necessary and sufficient as “writing” to the next individual, who “reads” the action’s trace or product in order to decide his own action.
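A stigmergic system can be sketched with no message-passing at all. This hypothetical example is loosely inspired by termite nest-building, the behavior the term was coined to describe. It is not a model of any real species. Builders never signal one another. Each one reads the current state of a shared wall and adds a brick to the lowest column. The function names and the wall itself are illustrative assumptions.

```python
def choose_column(wall: list[int]) -> int:
    """A builder's entire decision rule: read the wall, pick the lowest column."""
    return min(range(len(wall)), key=lambda i: wall[i])  # ties go to the leftmost


def build(n_columns: int, n_builders: int) -> list[int]:
    wall = [0] * n_columns              # shared environment: the only "channel"
    for _ in range(n_builders):
        column = choose_column(wall)    # "read" the traces left by earlier builders
        wall[column] += 1               # act; the act itself is the "writing"
    return wall


print(build(n_columns=4, n_builders=10))
```

The wall stays level even though no builder ever addresses another. Each builder's output is simply the next builder's input.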

One bullet we must bite with this view: communication is not special to human beings, or even to animals, but is a feature of all intelligence, including machine intelligence. We can accurately say, I think, that organic intelligence evolved (that is, was selected to possess) the ability to alter its own behavioral outputs on the basis of informational inputs (feedback). Machine intelligence, by contrast, was designed with this ability as foundational to it. We communicate with computers (feed them inputs), and computers communicate with us (feed us inputs). We would not consider a machine a computer if its behavior could not be altered by inputs, or if it could not alter our behavior through its outputs.

One objection readers may raise to this view concerns how we see the act of providing information. We often do not see it as manipulation intended to railroad the receiver into some specific outcome, or even set of outcomes. Instead, we see it as an act that enables or empowers the receiver. He is now able to “freely choose” his next act in a more informed way, so that it furthers his own goals and interests, premised on the representation of reality he has received. In other words, the manipulation happens through an individual following his own best interests! The first catch lies in that last clause: premised on the representation received. His “freedom of choice,” and his optimization of the environment in service of his desires, is founded on a perception of the world that we, in communicating, have altered.
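The “first catch” can be shown in a few lines. In this hypothetical sketch, the receiver always optimizes by its own lights: it picks the route it believes is fastest. The sender never touches that decision rule. It only supplies a report. The route names and travel times are invented. Whether the report is accurate or not, the mechanism is the same. The receiver's “free” choice is computed from a representation the sender has edited.

```python
def choose(beliefs: dict[str, float]) -> str:
    """The receiver optimizes by its own lights: the route it believes is fastest."""
    return min(beliefs, key=beliefs.get)


def inform(beliefs: dict[str, float], report: dict[str, float]) -> dict[str, float]:
    """The sender never touches the decision rule, only the beliefs it runs on."""
    return {**beliefs, **report}


commuter = {"highway": 20.0, "main street": 25.0}   # believed minutes per route
print(choose(commuter))
print(choose(inform(commuter, {"highway": 40.0})))  # e.g. "there's a crash on the highway"
```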

The second catch is that there is little evidence, and little reason to believe, that human minds are not, basically, deterministic at their core. There are accounts of free will that are compatible with this picture. However, I am not aware of a reputable position holding that, given complete information and adequate computational power, an individual’s behavior could not in principle be modeled with perfect fidelity, moment by moment. While human minds embody a complex, unknowable, black-box determinism, we can imagine a much simpler agent (an elementary machine, or a mathematical function) whose full set of input-output relations is known. In this case, could we plausibly claim that we were not manipulating its outputs when we edit its inputs? I don’t think we can. The concepts of choice and free will may be pragmatically useful abstractions, but they cannot be a serious rebuttal to this view of communication between organisms-as-machines.
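The thought experiment about a simpler agent can be written out directly. The sketch below assumes an agent whose entire input-output mapping is known. Here that agent is a hypothetical thermostat rule with an invented 19 °C threshold. “Communicating” with it then reduces to choosing which input to feed it. Choosing an input for the sake of the output it will produce is hard to describe as anything other than steering that output.

```python
from typing import Callable, Iterable, Optional


def thermostat(reading_celsius: float) -> str:
    """A deliberately simple agent whose whole input-output table is known."""
    return "heat on" if reading_celsius < 19.0 else "heat off"


def steer(agent: Callable[[float], str], candidate_inputs: Iterable[float],
          desired_output: str) -> Optional[float]:
    """Return the first available input that makes `agent` emit `desired_output`."""
    for reading in candidate_inputs:
        if agent(reading) == desired_output:  # checkable because the agent is fully known
            return reading
    return None                               # no available input yields that output


print(steer(thermostat, [22.0, 20.0, 18.0, 15.0], "heat on"))
print(steer(thermostat, [22.0, 20.0], "heat on"))
```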

Last, I want to emphasize the etymology of “cybernetics.” I think this is a more appropriate discursive move than many etymological arguments, since the root was deliberately chosen by the field’s founder, Norbert Wiener. A point I have tried repeatedly to stress in making the ACiM argument is that we do not usually communicate with a particular behavioral outcome in mind. Rather, we steer the interaction, and our interlocutor’s behavior, in a general direction that we believe will more probably bring about desirable results. At the same time, we steer away from directions that we believe will more probably bring about undesirable results. This is, by and large, the only way we can manipulate systems as complex as human beings. The origin of “cybernetics,” of course, is the Greek κυβερνήτης, “steersman.”
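Steering, as opposed to aiming at a single outcome, can be sketched as choosing the message that shifts the probabilities of the receiver's responses. The shift moves them toward desirable outcomes and away from undesirable ones. The receiver model and the payoffs below are invented for illustration. Real interlocutors offer no such table, so the sketch only shows the shape of the reasoning. Exact fractions are used so the arithmetic is easy to check by hand.

```python
from fractions import Fraction as F

# Hypothetical receiver model: probability of each response after each message.
RESPONSES = {
    "say nothing":         {"agree": F(1, 4), "stall": F(1, 2), "refuse": F(1, 4)},
    "mention deadline":    {"agree": F(1, 2), "stall": F(1, 4), "refuse": F(1, 4)},
    "mention shared goal": {"agree": F(2, 5), "stall": F(1, 2), "refuse": F(1, 10)},
}
# The sender's valuation: toward desirable results, away from undesirable ones.
PAYOFF = {"agree": 1, "stall": 0, "refuse": -1}


def expected_payoff(message: str) -> F:
    return sum(p * PAYOFF[response] for response, p in RESPONSES[message].items())


for message in RESPONSES:
    print(f"{message}: {expected_payoff(message)}")
best = max(RESPONSES, key=expected_payoff)
print("steer with:", best)
```

No message guarantees agreement. Mentioning the deadline makes agreement most likely. Mentioning the shared goal still scores best, though, because it also makes refusal least likely.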

[1] I am of the opinion that imperative statements can be functionally described as indicative statements. Such statements represent the desires of a requesting individual to an individual who has an interest in fulfilling those desires. The receiver may have this interest because of a formal system of punishment, such as the military’s. The receiver may also wish to ingratiate themselves with the requester, or the two may be engaged in a relationship of reciprocity. Likewise, indicative sentences can be functionally described as imperatives to the receiver to believe the speaker’s utterance and update accordingly.
